Since Google open sourced Kubernetes in 2014, it has become one of the most popular open source projects. Adopted by all major cloud providers, Kubernetes has undoubtedly become the de facto container orchestrator.

But what is Kubernetes and container orchestration really about? Without the technical background and knowledge about the technology developments that preceded it, it can be challenging to wrap your head around it.

If you are a business leader, digital transformation is probably top of mind. Interactions with customers, suppliers, and partners have moved into the digital space. Frictionless, user-friendly, even memorable interactions can translate into a competitive edge. Well, Kubernetes (and containers!) are at the core of the technology stack driving today’s digitalization. While they were initially adopted mainly by cloud-born organizations, traditional organizations are now jumping on the bandwagon and leveraging them too.

Before we get to Kubernetes, let’s take a brief trip down memory lane as we outline the technology developments that led to the so-called cloud native stack.

What Are Bare Metal Machines?

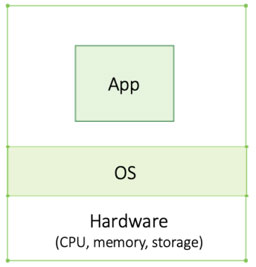

A computer consists of hardware: mainly the central processing unit (CPU), storage, and memory. On top of the hardware runs the operating system (OS), a software layer that manages the underlying hardware resources. Applications such as MS Word and Outlook run on top of the OS, which ensures they have enough resources to run smoothly.

The OS ‘abstracts’ the hardware from the applications. This abstraction concept is key in IT; you’ll hear it over and over again. It basically means that the OS acts as a mediator between hardware and apps. Apps don’t need to know anything about the hardware. They just let the OS know how much memory, storage, and compute capacity they need, and the OS makes sure each program gets access to those resources when needed. The same applies to you. The OS abstracts all the hardware complexity from you. You only interact with applications and the OS (e.g. request it to launch or close a program) and need not worry about the hardware specifics.

A bare metal machine is basically a machine with hardware, an OS, and one or more applications running on top of it. Before virtualization came along, there was no way to separate apps running on the same machine. Apps ran side by side, sharing the same resources. This lack of isolation meant that when one app crashed, it could drag a co-located app right down with it, a risk most companies wouldn’t want to take. Hence, they used one bare metal machine per app. Since application resource needs fluctuate, each machine had to have enough resources to handle the app’s peak, even if the app only peaked 1% of the time. That is a very inefficient use of resources.
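To make the inefficiency concrete, here is a minimal back-of-the-envelope sketch. The three apps and their core counts are made-up numbers chosen purely for illustration; they are not measurements from any real system.

```python
# Hypothetical workloads: (average CPU cores used, peak CPU cores needed).
# All numbers are invented for illustration only.
apps = {
    "billing": (2, 16),
    "email": (1, 8),
    "reporting": (3, 24),
}

# One bare metal machine per app: each machine is sized for its app's peak.
provisioned = sum(peak for _, peak in apps.values())
used_on_average = sum(avg for avg, _ in apps.values())

# Share of the purchased capacity that is actually busy on average.
utilization = used_on_average / provisioned

print(f"Provisioned cores: {provisioned}")
print(f"Cores busy on average: {used_on_average}")
print(f"Average utilization: {utilization:.1%}")
```

Under these assumed numbers, most of the purchased capacity sits idle most of the time, which is the problem virtualization set out to solve.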

Virtual Machines: In a Nutshell

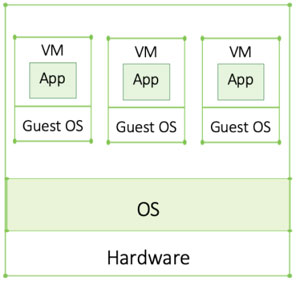

A virtual machine (VM) is code that wraps around an application, pretending to be hardware and isolating it from other applications. The code inside the VM thinks it is on its own individual machine, hence the term ‘virtual’ machine. Just like a physical machine, a VM has an OS, referred to as the guest OS. The OS running on the physical machine is the host OS. Whereas a bare metal machine is a computer with no VMs, a machine running VMs allows applications to be co-located safely, significantly increasing efficiency.

For an application there’s no difference between running on a physical machine or a VM. However, for a system administrator, VMs make day-to-day infrastructure management a lot easier.

Understanding Distributed Applications

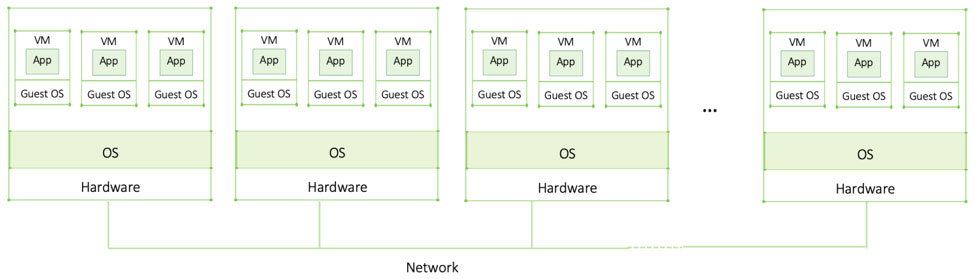

Enterprise applications can be massive and require a lot more resources than a single powerful server can provide. Some may even need to run processes in parallel (a single CPU core can only run one process at a time). To achieve that, multiple machines can be grouped together into a single network, forming a distributed system or application. Two main characteristics are:

(1) A group of autonomous physical or virtual machines (2) that function as one coherent system

A collection of machines working together is referred to as a cluster; each machine is called a node. To achieve a common goal, nodes must communicate: status updates, data, and commands are all exchanged over the network. In short, multiple (sometimes geographically dispersed) machines connected over a network become one powerful supercomputer.

Unlike monolithic applications (applications built as one single entity), distributed applications are broken down into different components and placed into VMs. This allows for faster updates, rollouts, and fixes. Additionally, if one component crashes, it won’t affect the entire system.

Containers: The New, More Resource-Efficient VM

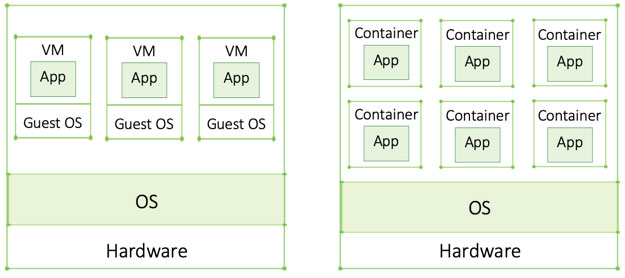

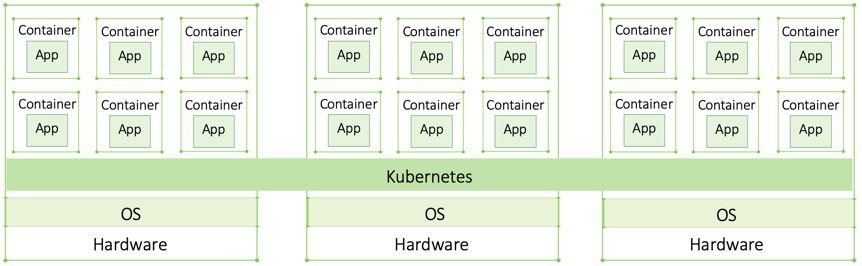

Containers are the next step in the virtualization evolution. Unlike VMs, containers do not require a guest OS and are thus a lot more lightweight. That means more containers than VMs can run on a machine, yet another efficiency gain. Additionally, containers are portable, meaning they can be moved across environments without the need for additional configuration. Everything the app needs is in the container, making it environment-independent.

With VMs, on the other hand, hardware dependencies remain. Each infrastructure has its own virtualization tool, making portability more difficult. For instance, on-premises VMs aren’t necessarily compatible with AWS, which has its own unique virtualization tech. Further testing and configuration are also needed. In short, moving VMs between heterogeneous environments isn’t straightforward.

What Are Microservices?

Microservices are applications broken down into even smaller components. These micro-components are called services. Since each microservice needs its own VM or container, microservices only took off after containers became popular. Being more lightweight, containers made microservices feasible for the first time. A microservices application relying on VMs only would consume far too many resources.

Placing each microservice in a separate container speeds up the building, testing, deploying, debugging, and fixing of components. As you can imagine, debugging a few lines of code is a lot easier than fixing hundreds of lines. This modularity also speeds up updates, new feature rollouts, and rollbacks should an error arise.

A Look at Container Orchestrators

If an application was built with hundreds of VMs, it might require thousands of containers once migrated. As you can imagine, managing them all manually is close to impossible. This is where container orchestrators, such as Kubernetes, come into play. Container orchestrators manage (or orchestrate) containers, ensuring all of them are up and running, and automate a lot of the tedious manual work.

The container orchestrator runs across the entire cluster, abstracting the underlying resources (e.g., memory, storage, CPU) from the applications. While there are alternatives to Kubernetes on the market (e.g. Docker Swarm), Kubernetes has won the container war. Since 2018, all major cloud providers have offered their own Kubernetes solutions.

Kubernetes: What Does It Do?

Ever since Google open sourced Kubernetes in 2014, its adoption has been growing. We know it’s huge, but what is it really?

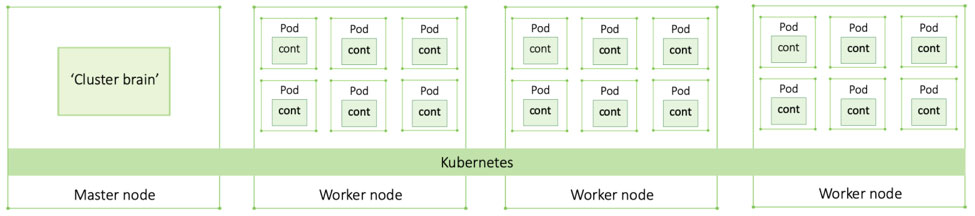

It helps to see Kubernetes as a type of cluster or datacenter OS. It manages the resources of an entire cluster, whether the nodes are on premises or in the cloud. Just like the OS on your laptop, Kubernetes abstracts away the infrastructure so developers don’t have to worry about the underlying resources. They simply develop applications for Kubernetes, and Kubernetes takes care of all the underlying details (e.g. where the app runs, how many resources each container gets, etc.).
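The idea of handing placement decisions to the cluster can be illustrated with a small sketch. This is a deliberately simplified "first fit" placement written for this article, not Kubernetes' actual scheduling algorithm, which filters and scores nodes using many more criteria. The `Node` class, the `place` function, and all node and container names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: float   # CPU cores still available on this node
    mem_free: int     # memory still available, in MiB
    containers: list = field(default_factory=list)


def place(container_name, cpu, mem, nodes):
    """Put the container on the first node with enough free CPU and memory."""
    for node in nodes:
        if node.cpu_free >= cpu and node.mem_free >= mem:
            node.cpu_free -= cpu
            node.mem_free -= mem
            node.containers.append(container_name)
            return node.name
    return None  # no node has room, so the container stays unplaced


nodes = [
    Node("node-a", cpu_free=2.0, mem_free=4096),
    Node("node-b", cpu_free=4.0, mem_free=8192),
]

# The developer only states what each container needs, not where it runs.
placements = {
    "web": place("web", cpu=1.5, mem=2048, nodes=nodes),
    "db": place("db", cpu=2.0, mem=4096, nodes=nodes),
    "cache": place("cache", cpu=0.5, mem=1024, nodes=nodes),
}
print(placements)
```

The point of the sketch is the division of labor: the application declares its resource needs, and the cluster software decides which machine has room.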

Master and Worker Nodes

A cluster consists of one or more masters and multiple worker nodes. The containerized applications run on the worker nodes. Each container is placed into a so-called pod, a sandbox-like environment that hosts and groups containers.

The master node is the ‘cluster brain’, managing worker nodes and resources. Through watch loops, it ensures the cluster always matches the declared state (see below). Generally, no applications, except for cluster-specific system apps, run on the master. Its sole purpose is managing the cluster.

The Kubernetes Difference

Kubernetes brings numerous benefits, but these three are the most powerful:

Declarative state – This is at the core of Kubernetes. Developers “declare” the desired state of the application. Kubernetes then implements everything according to that declared state and ensures, through watch loops, that the cluster continues to match it. This capability enables self-healing and scaling.

Self-healing – This capability is enabled by the declarative model. As just discussed, Kubernetes detects discrepancies between the current and the declared state. Once it detects one, it intervenes and fixes it, or self-heals (e.g. if a pod is down, it will spin up a new one).

Auto-scaling – Kubernetes can automatically scale workloads up and down. Think Netflix. Each Friday evening, usage peaks. More people want to stream video, and that requires a lot more bandwidth than, let’s say, on Tuesday at 2am. Kubernetes can automatically add more pods and/or nodes to balance the load (spread requests among different machines/processes) so more people can watch Netflix without experiencing buffering issues. After the peak, Kubernetes scales back down. That way Netflix only pays for the resources it actually needs.
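For readers who like to see the mechanics, the three capabilities above can be sketched in a few lines. This is a toy model written for this article, not Kubernetes code: `reconcile` stands in for one pass of a watch loop, and `scaled_replicas` follows the ratio-based formula described in the Kubernetes Horizontal Pod Autoscaler documentation. The function names, pod names, and load figures are assumptions, and adding nodes is not modeled.

```python
import math


def reconcile(desired_replicas, running_pods, next_id):
    """One pass of a watch loop: make the running pods match the declared count."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:   # too few: start replacements
        pods.append(f"pod-{next_id}")
        next_id += 1
    while len(pods) > desired_replicas:   # too many: stop the extras
        pods.pop()
    return pods, next_id


def scaled_replicas(current_replicas, current_load, target_load):
    """Replica count that brings the per-pod load back toward the target."""
    return math.ceil(current_replicas * current_load / target_load)


# Declarative state: the developer declares "3 replicas" and nothing else.
desired = 3
pods, next_id = reconcile(desired, [], next_id=1)
print(pods)

# Self-healing: one pod crashes, and the next loop pass replaces it.
pods.remove("pod-2")
pods, next_id = reconcile(desired, pods, next_id)
print(pods)

# Auto-scaling: per-pod load is at 90% against a 50% target, so scale up.
desired = scaled_replicas(len(pods), current_load=90, target_load=50)
pods, next_id = reconcile(desired, pods, next_id)
print(len(pods))
```

Notice that self-healing and auto-scaling reuse the same loop. The only thing that changes is the declared number of replicas, which is why the declarative model is described as the foundation for the other two.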

Kubernetes and Containers are Only One Part of the Puzzle

Now we know why Kubernetes is great. But deploying it in production requires a lot more than just Kubernetes and containers. An entire stack is needed to ensure enterprises have all the capabilities they need (e.g. role-based access control, disaster recovery, logging and monitoring). Configuring and fine-tuning these technologies so they work securely and reliably is no easy task. Additionally, demand for Kubernetes experts far exceeds the skills available on the market. In fact, projections show that even as more developers start learning Kubernetes, supply will still struggle to catch up with the growing demand.

The complexity of Kubernetes has led to an entire ecosystem of Kubernetes solutions. While some, like Portworx or Linkerd, address one particular area, others, like Kublr, focus on broader areas such as security, compliance, and operations. Navigating the space can be pretty confusing at first, as they all relate to Kubernetes, yet there are stark differences in terms of capabilities and approaches. It’s worth taking the time to understand the implications of each before adopting a particular tool.

Digital Transformation, Kubernetes, and DevOps: How Are They All Connected?

Kubernetes and DevOps may be murky terms for business leaders, whereas digital transformation is pretty well established. So how do they relate? In short, while business people talk digital transformation, technologists talk DevOps. Let’s add cloud-native to the mix and break them down.

Cloud Native Stack – Also called the “new stack”, cloud-native is the technology stack behind so-called cloud native applications. Cloud native apps are described as containerized, microservice-oriented, and dynamically orchestrated (aka Kubernetes). Based on this definition, containers and Kubernetes are at the core of the cloud native stack. Although developed for the cloud, the cloud native stack isn’t cloud-bound. Companies can run Kubernetes and containers on premises or even in air-gapped environments, and increasingly do. In fact, many of our clients come to us for that particular use case.

DevOps – Refers to a methodology made possible by cloud-native technologies. DevOps integrates development, IT operations, QA, and security. Whereas previously these were all separate functions, DevOps aims to make them all part of one process. Properly implemented, it translates into reduced cost and better code. It does, however, require a stark cultural shift, and that shift has proven even more difficult than the adoption of the technologies enabling it.

Digital Transformation – The broader term that describes this shift. Fueled by a cloud-native enabled DevOps approach, digital transformation means adopting an agile, software-first approach that allows enterprises to adapt to rapidly changing business requirements.

We developed this article to bring clarity to Kubernetes for those without a technical background. Have questions or want to share your thoughts? Please comment.