This article is part of a new series providing a high-level overview of basic Kubernetes concepts and additional resources for further deep dives.

Open-sourced by Google in 2014, Kubernetes is a container orchestrator: it lets users deploy and manage applications that run in containers, often distributed across many machines. It takes care of scaling, self-healing, load balancing, rolling updates, and more. The project is hosted by the Cloud Native Computing Foundation (CNCF) alongside many other open source projects, and its source code is available on GitHub.

Kubernetes works with Docker, a container runtime. Kubernetes orchestrates the hosts running Docker, while Docker handles lower-level tasks such as starting, stopping, and managing individual containers. Containers and Kubernetes complement each other and go hand in hand.

Kubernetes is built for scale. A legacy app that ran on hundreds of VMs may well run in thousands of containers once containerized. That is far too many to manage by hand, so you need a way to automate tasks. In The Kubernetes Book, Nigel Poulton describes Kubernetes as a kind of data center operating system (OS). As he points out, it helps to stop viewing a data center as a collection of computers and to see it as a single supercomputer instead. Just as an OS abstracts the underlying resources (e.g., CPU, RAM, storage) away from the user, Kubernetes abstracts away data center resources: your data center becomes one single resource pool.
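To make the resource-pool idea concrete, here is a minimal Python sketch that sums per-node capacity into one cluster-wide total. The `Node` class, its fields, and the `pool_capacity` function are hypothetical illustrations, not Kubernetes APIs. Real nodes report their capacity through the Kubernetes API in a different format.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Node:
    # Hypothetical capacity fields for illustration only;
    # real Kubernetes Node objects report capacity in a different format.
    name: str
    cpu_cores: int
    memory_gib: int


def pool_capacity(nodes: List[Node]) -> Dict[str, int]:
    """Treat many machines as one resource pool by summing their capacity."""
    return {
        "cpu_cores": sum(n.cpu_cores for n in nodes),
        "memory_gib": sum(n.memory_gib for n in nodes),
    }


nodes = [Node("node-a", 4, 16), Node("node-b", 8, 32), Node("node-c", 4, 16)]
print(pool_capacity(nodes))  # {'cpu_cores': 16, 'memory_gib': 64}
```

The pool view is a simplification. A single pod must still fit on one node, so the 16 pooled cores above could not host one pod that requests 10 cores, because no single node has that many.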

Docker, Kubernetes, and Microservices

Docker and Kubernetes are ideal for microservices. While microservices are by no means new, these technologies led to their proliferation. Microservices are applications broken down into small components, referred to as services. Because containers are more lightweight than VMs, many more containers than VMs fit on a single machine. That made it feasible, for the first time, to break an app into micro-components, each placed in its own container.

Master, worker nodes, and pods

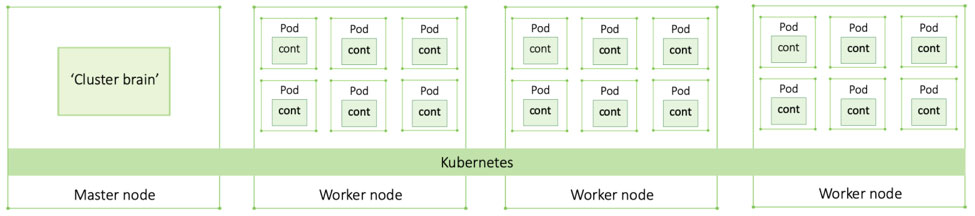

A Kubernetes cluster consists of one or more masters and multiple worker nodes. The master, often referred to as the cluster control plane, manages and monitors the cluster, makes decisions (e.g., which node runs which app), implements changes, and responds to events. The actual applications run on the worker nodes. Each service (or application component) runs in a container, which in turn is placed in a pod: a sandbox-like environment whose only function is to host and group containers.
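The master's placement decision can be illustrated with a toy scheduler. This Python sketch assumes hypothetical `Pod` and `WorkerNode` classes and a deliberately simple rule. It filters out workers without enough free CPU, then picks the one with the most free CPU. The real Kubernetes scheduler also filters and scores nodes, but it uses many more criteria. Treat this as an illustration of the idea, not its actual algorithm.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Pod:
    name: str
    cpu_request: float  # CPU cores the pod asks for (hypothetical field)


@dataclass
class WorkerNode:
    name: str
    cpu_free: float  # unreserved CPU cores on this worker
    pods: List[str] = field(default_factory=list)


def schedule(pod: Pod, nodes: List[WorkerNode]) -> Optional[str]:
    """Toy placement decision: return the chosen node's name, or None if nothing fits."""
    # Filter: keep only workers with enough free CPU for the pod.
    candidates = [n for n in nodes if n.cpu_free >= pod.cpu_request]
    if not candidates:
        return None  # no room anywhere; the pod stays unscheduled
    # Score: prefer the worker with the most free CPU, which spreads load.
    best = max(candidates, key=lambda n: n.cpu_free)
    best.cpu_free -= pod.cpu_request
    best.pods.append(pod.name)
    return best.name


workers = [WorkerNode("worker-1", 3.0), WorkerNode("worker-2", 4.0)]
for p in [Pod("web", 1.5), Pod("api", 1.5), Pod("db", 3.0)]:
    print(p.name, "->", schedule(p, workers))
# web -> worker-2
# api -> worker-1
# db -> None
```

After the first two placements, no worker has 3 free cores, so `db` gets `None`. In Kubernetes, such a pod would remain in the Pending state until enough resources free up.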

Declarative model & desired state

The declarative model and the desired state are at the core of Kubernetes. The declarative model means that the developer ‘declares’ the desired state of the application in a manifest file and posts it to the Kubernetes API server, where it’s stored. Kubernetes deploys the app according to the desired state and, through watch loops, ensures the actual state doesn’t drift from it. If it does, Kubernetes intervenes and fixes it.
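The watch-loop idea can be sketched as a single reconciliation pass. The pass compares the desired replica count with what is running and closes the gap. The `desired` dictionary loosely mirrors the `replicas` field of a Deployment manifest. The `reconcile` function and the pod-naming scheme are hypothetical. A real controller runs continuously and acts through the API server rather than editing a local list.

```python
import itertools

# Desired state, loosely mirroring a Deployment manifest's spec.replicas
# (hypothetical structure for illustration only).
desired = {"app": "web", "replicas": 3}

# Observed state: names of the pods currently running (simulated locally).
running = ["web-0"]
_next_id = itertools.count(1)  # source of unique suffixes for new pod names


def reconcile(desired, running):
    """One pass of a control loop: move the observed state toward the desired state."""
    diff = desired["replicas"] - len(running)
    if diff > 0:
        # Too few pods: start the missing ones.
        for _ in range(diff):
            running.append(f"{desired['app']}-{next(_next_id)}")
    elif diff < 0:
        # Too many pods: stop the surplus, most recently listed first.
        del running[diff:]
    return running


reconcile(desired, running)
print(running)  # ['web-0', 'web-1', 'web-2']

running.remove("web-1")  # simulate a pod crashing
reconcile(desired, running)
print(running)  # ['web-0', 'web-2', 'web-3']

desired["replicas"] = 1  # the developer posts a new desired state
reconcile(desired, running)
print(running)  # ['web-0']
```

The function never asks how the cluster got into its current state. It only compares observed against desired state and acts on the difference, which is what lets the same loop handle both crashes and intentional scaling.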

Further reading

- What is Kubernetes by kubernetes.io

- The Kubernetes Book

- Docker and Kubernetes: The Big Picture (Pluralsight course)

- A Closer Look at Kubernetes (for readers with no technical background)

- Kubernetes Primer Infographic (for readers with no technical background)

Help us improve the further reading section by pointing us to your favorite resource in the comments.