Enterprise Platform

Kublr helps enterprises deploy, run and manage reliable, secure Kubernetes across all environments.

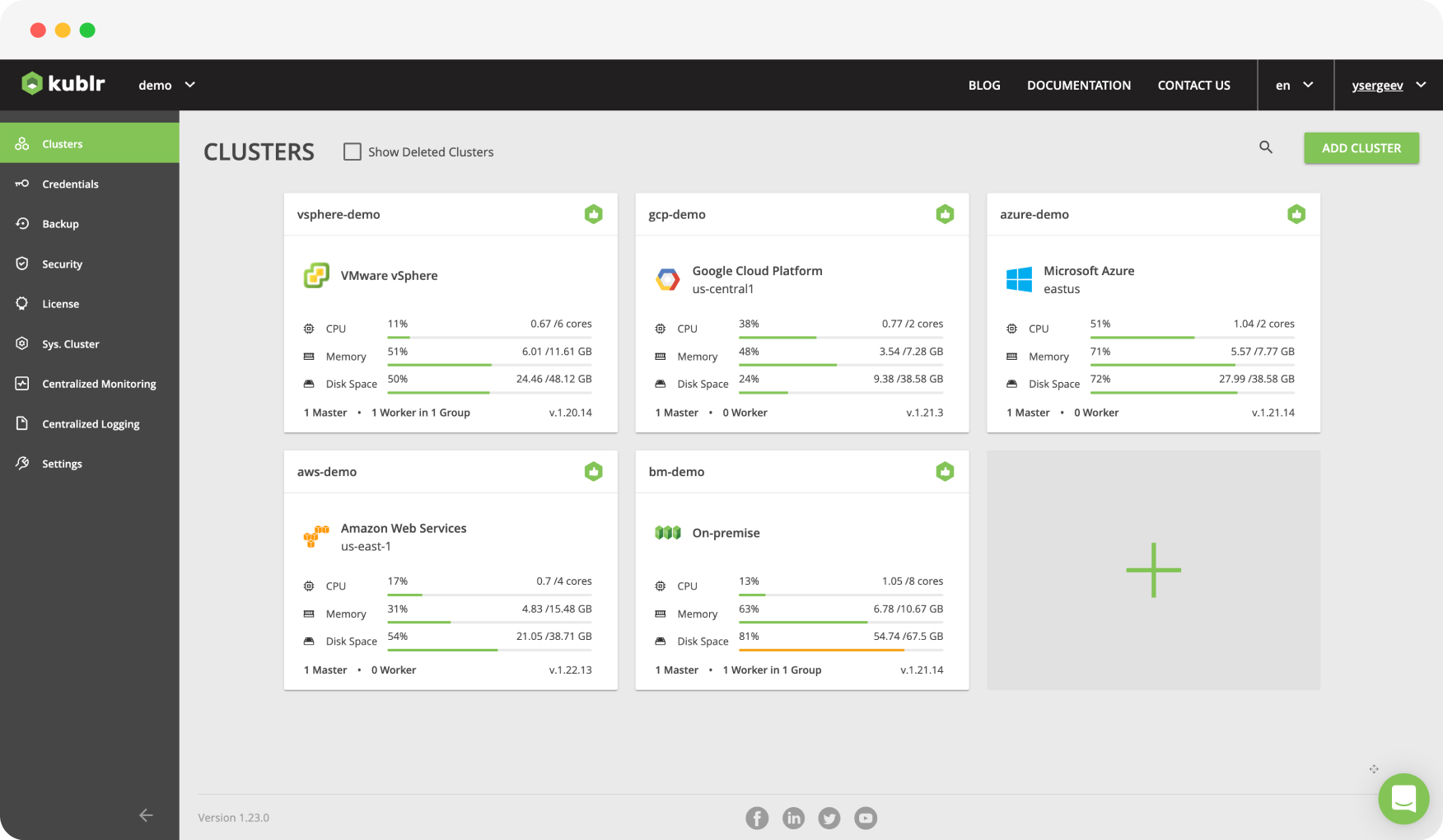

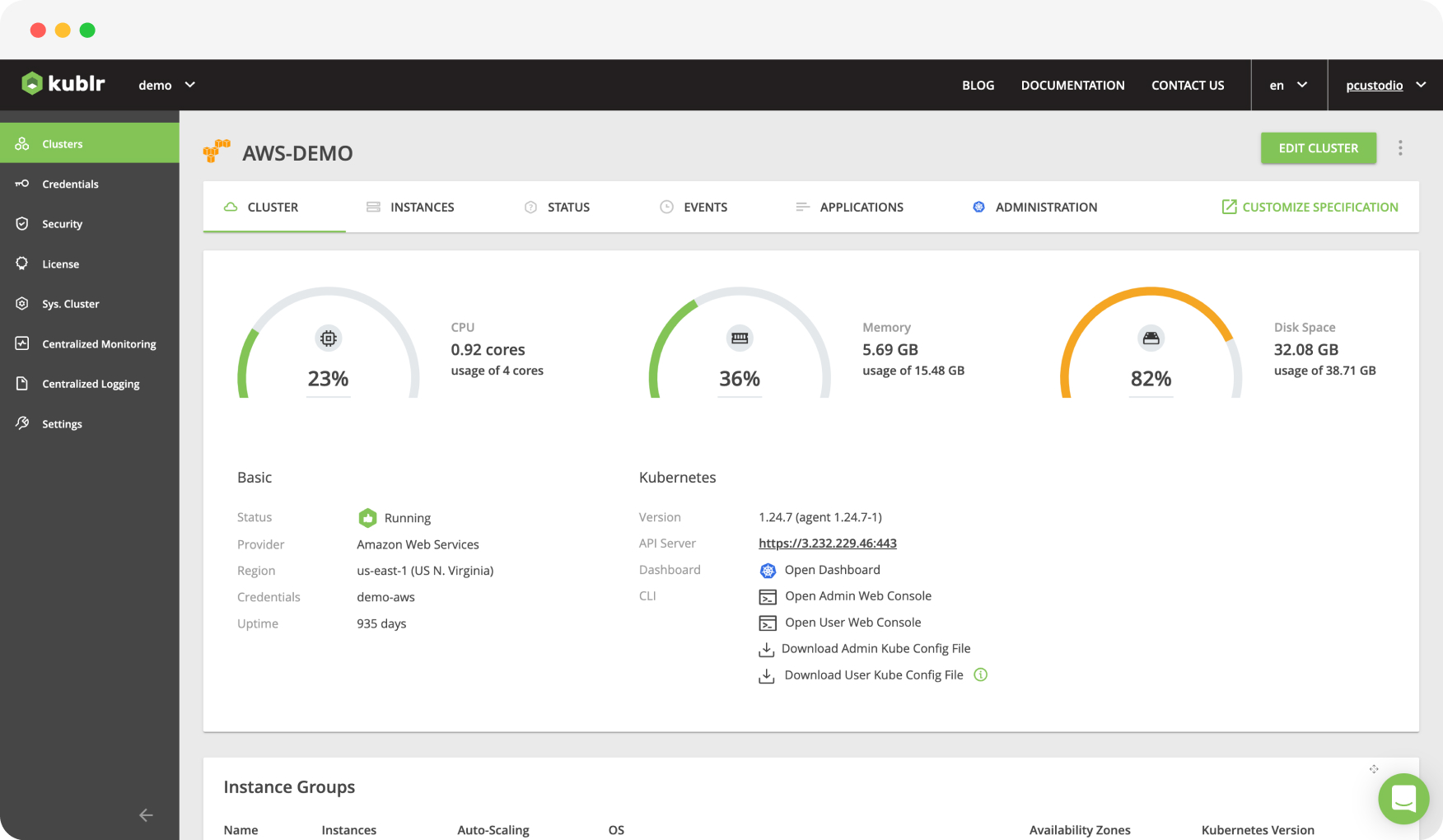

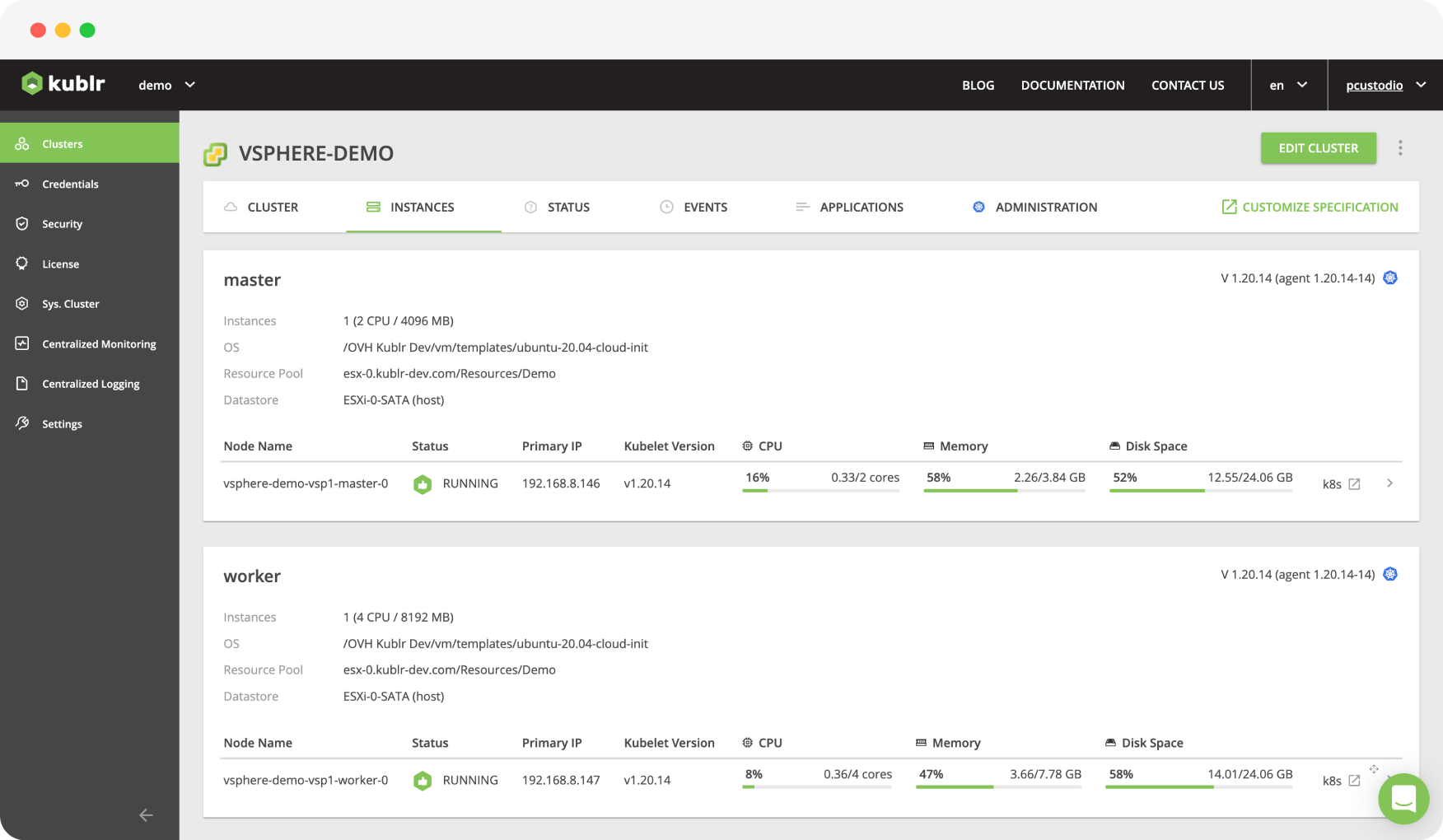

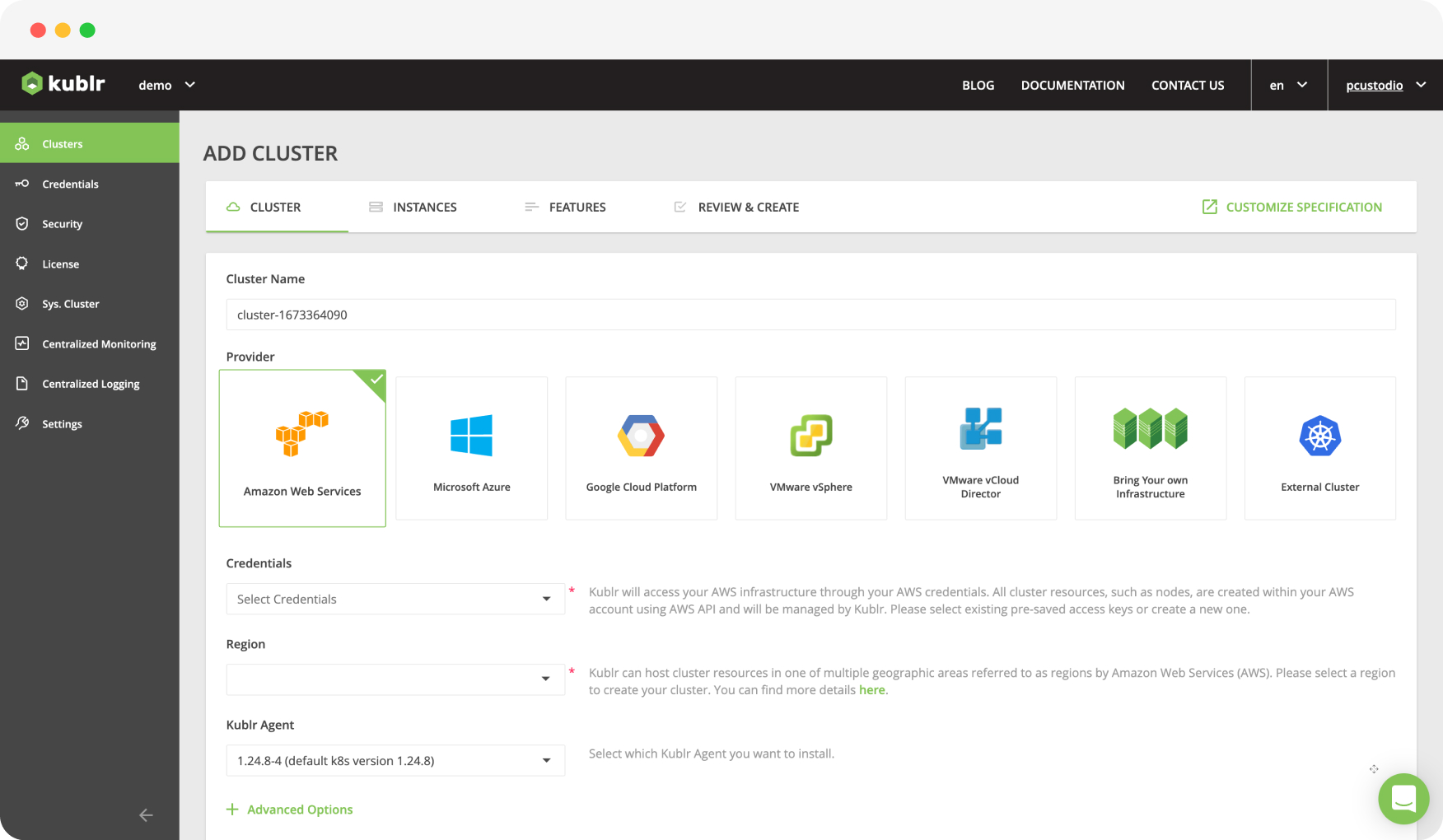

Centralized multi-cluster management

Deploy, run and manage all of your Kubernetes clusters from a single control plane across all of your on-premises, hosted and cloud environments. Kublr integrates with environment-specific infrastructure management APIs to enable easier Kubernetes deployment, greater automation and advanced capabilities like node auto-scaling and disaster recovery, where applicable.

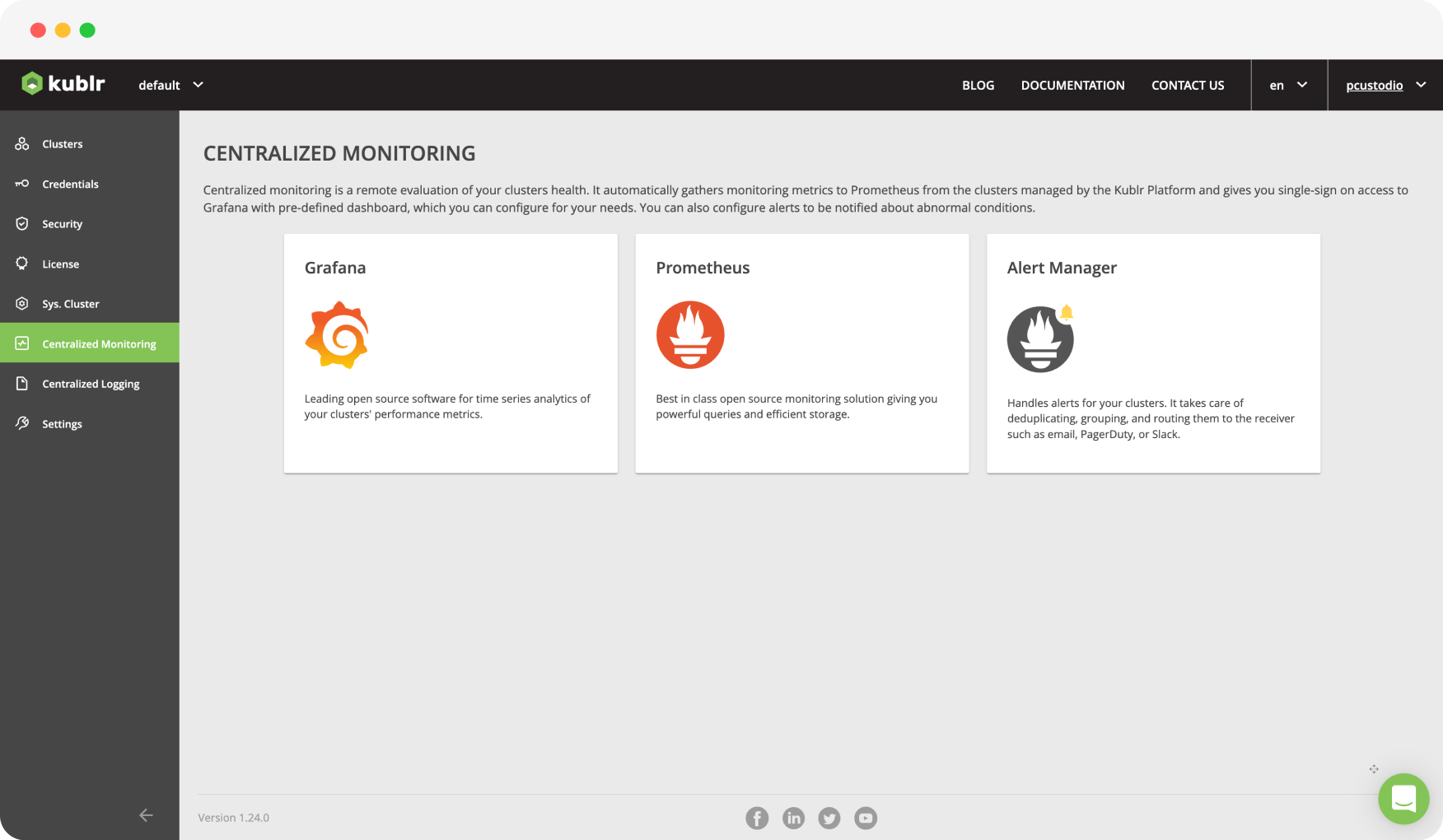

Centralized logging and monitoring

Each time a cluster is deployed, Kublr automatically connects it to log collection and monitoring components running in the Kublr Control Plane. It uses Prometheus to collect and store metrics and Elasticsearch to collect and store logs, with Grafana and Kibana to visualize them. A set of default Grafana and Kibana dashboards simplifies cluster and workload health monitoring. Alert Manager, which integrates with your email system, Slack or PagerDuty, ensures your team doesn’t miss important alerts. Kublr’s centralized monitoring and logging can also be integrated with existing enterprise management tools.

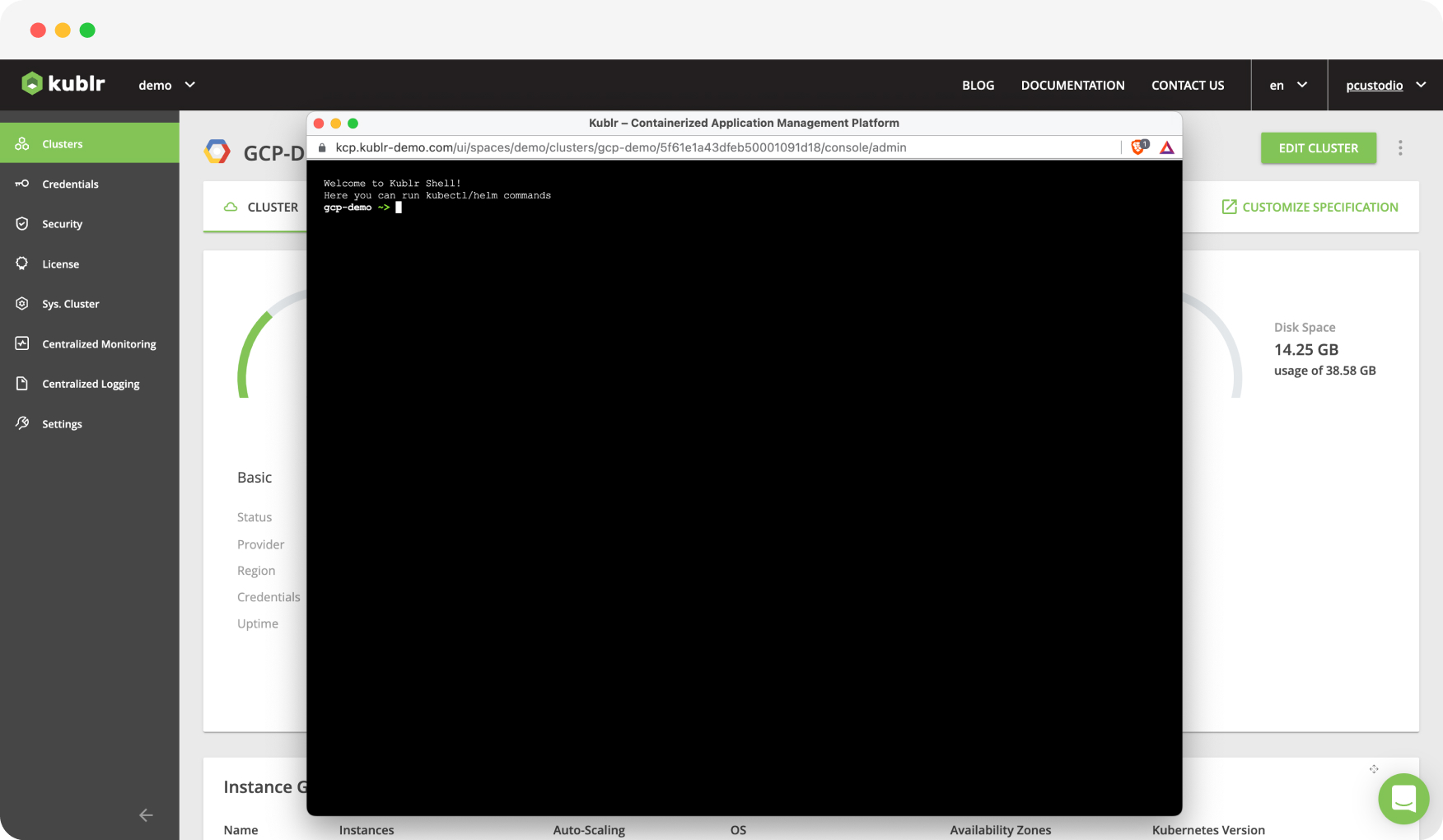

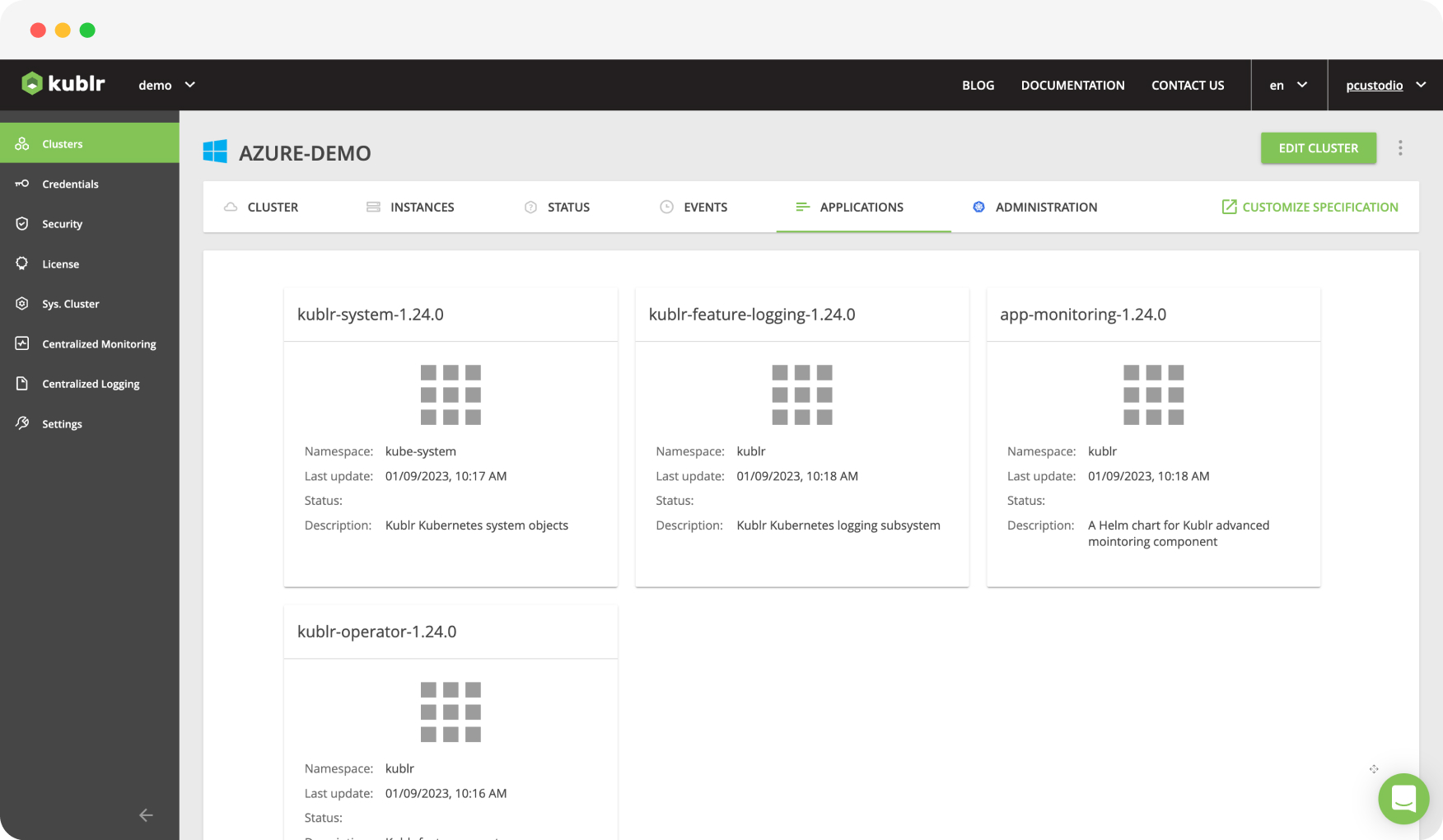

Intuitive console for your Ops team

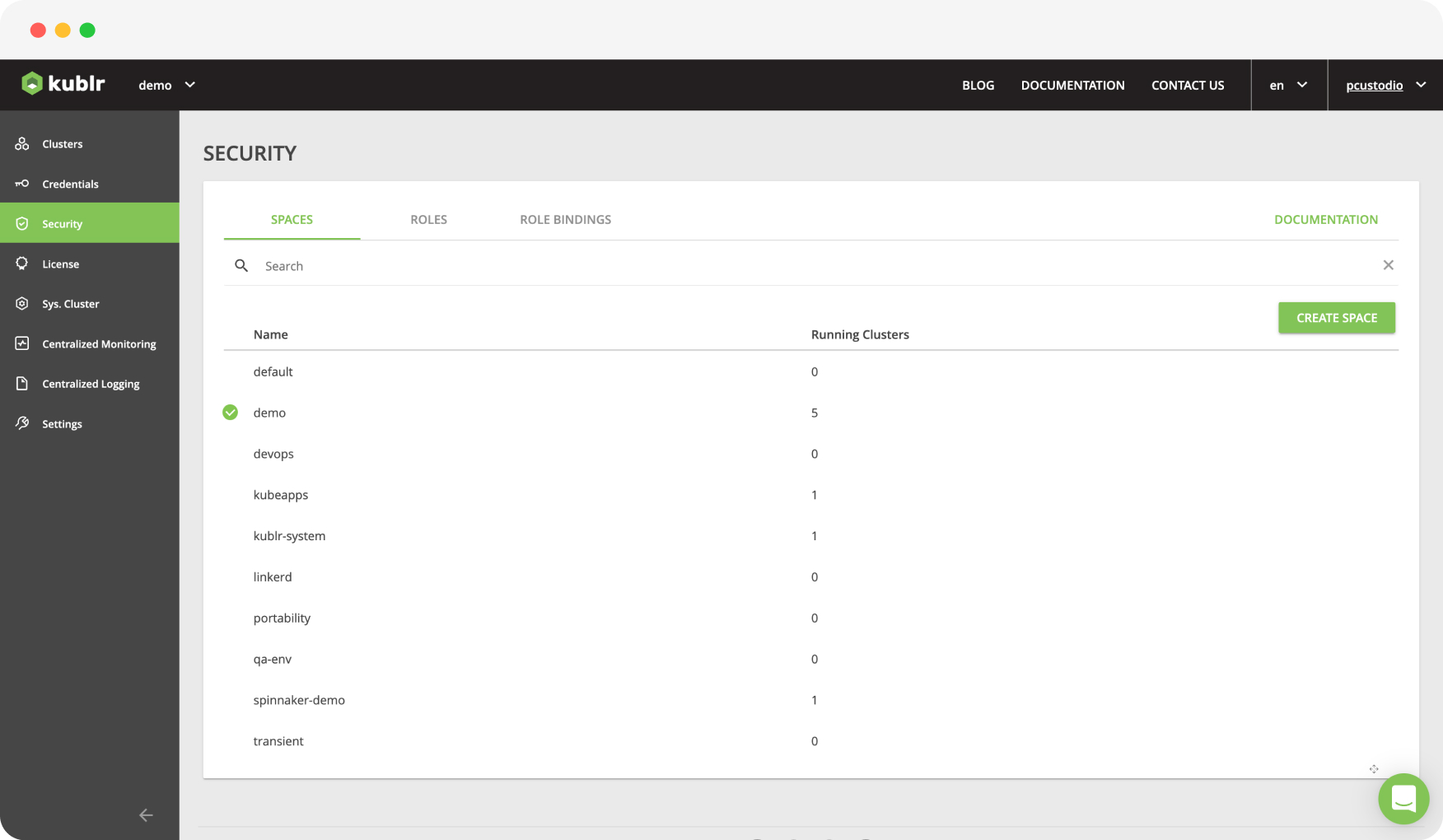

The Kublr Console simplifies Kubernetes deployment and management, providing visibility into all running clusters. Create, modify and manage clusters and their related resources from within the control plane. Clusters can be grouped into “spaces”, with separate permissions assigned to users based on their roles.

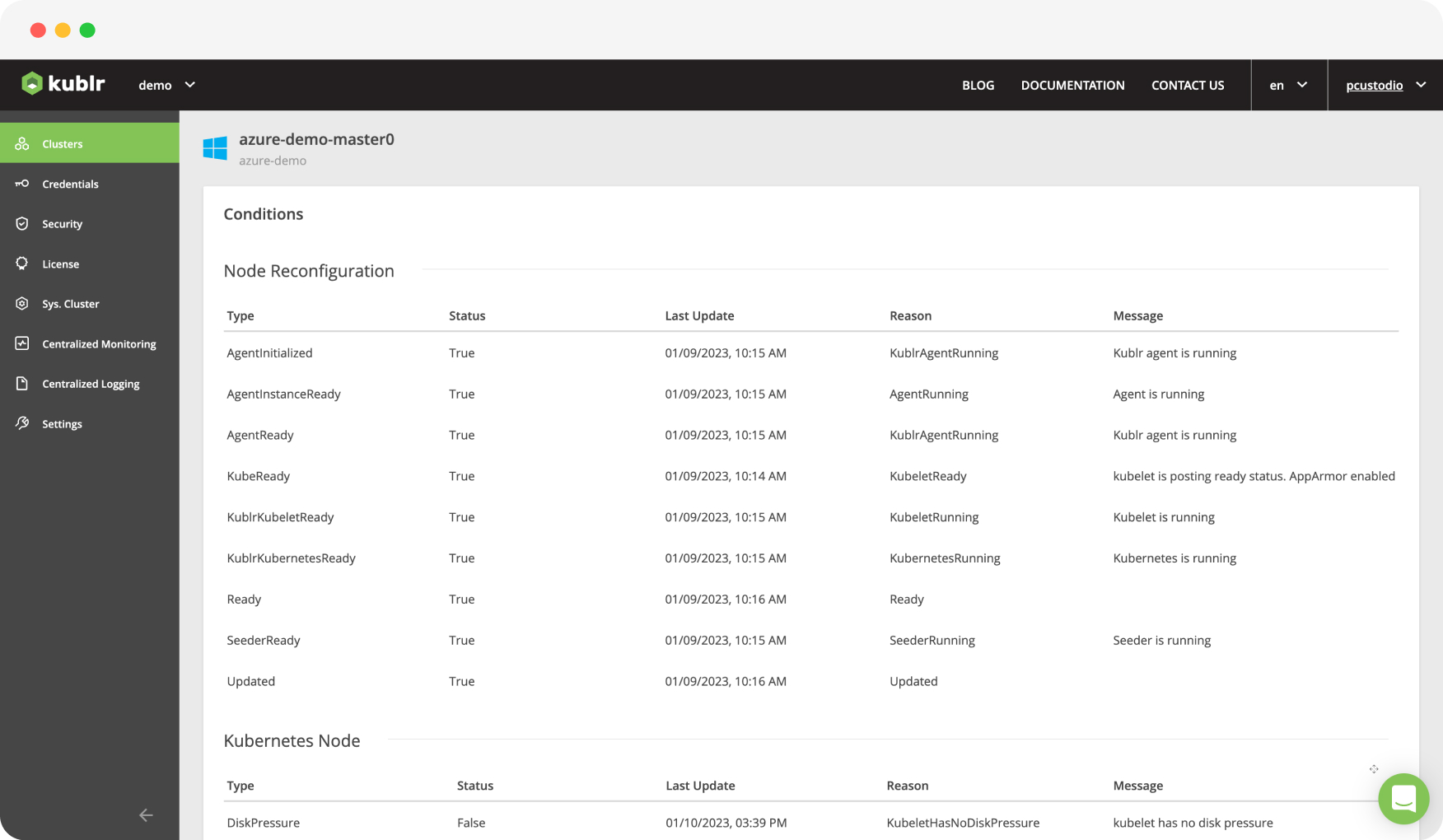

Self-healing nodes and infrastructure management

Leverage native infrastructure provisioning and automation tools to deploy self-healing and fully recoverable clusters. The Kublr Control Plane monitors clusters and infrastructure, checks whether cluster parameters (including CPU, RAM, disk and network usage, as well as many other Kubernetes-specific parameters) are within the configured limits, and alerts users in case of any deviation.
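The limit check described above can be illustrated with a minimal Python sketch. It is not Kublr's implementation: the `Limit` structure, the `find_deviations` function and the metric names are all hypothetical, chosen only to show the idea of comparing reported values against configured limits and raising an alert on any deviation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    """Allowed range for one metric (hypothetical structure, not a Kublr type)."""
    minimum: float
    maximum: float


def find_deviations(metrics, limits):
    """Return sorted alert messages for metrics that are missing or out of range."""
    alerts = []
    for name, limit in limits.items():
        if name not in metrics:
            # A metric that stopped reporting is treated as a deviation too.
            alerts.append(f"{name}: no data")
            continue
        value = metrics[name]
        if not (limit.minimum <= value <= limit.maximum):
            alerts.append(
                f"{name}: {value} outside [{limit.minimum}, {limit.maximum}]"
            )
    return sorted(alerts)


# Hypothetical limits and a sample reading, for illustration only.
limits = {
    "cpu_percent": Limit(0, 85),
    "ram_percent": Limit(0, 90),
    "disk_percent": Limit(0, 80),
}
sample = {"cpu_percent": 42.0, "ram_percent": 95.5}
alerts = find_deviations(sample, limits)
```

In this sketch, `alerts` would contain one entry for the RAM reading above its limit and one for the disk metric that did not report. A real system would route such messages to a notification channel rather than return them as strings.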

Workload portability. Standards compliance. Zero lock-in

Kublr runs upstream, open-source Kubernetes, enabling workload portability to other platforms and across environments. Built on cloud-native principles, Kublr is infrastructure-, OS- and technology-agnostic.

Reliable and secure cluster architecture

The Kublr agent – a single, lightweight binary installed on each cluster node – performs setup and configuration, proactively monitors health status and restores nodes in case of failure, enabling self-healing and self-orchestration. Kublr automatically sets up secure communication between master and worker nodes every time you deploy a cluster.

Enterprise security

Centrally define and manage user roles and permissions. Users can access their clusters through integrations with Active Directory, LDAP, SAML and OIDC-based authentication services or third-party single-sign-on solutions. Kublr enables granular, role-based access control (RBAC) to meet enterprise security needs. Administrators and cluster owners can set fine-grained permissions globally or per space.
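As a rough illustration of permissions that apply either globally or per space, consider the following Python sketch. The role names, action strings, the `GLOBAL` marker and the `is_allowed` function are assumptions made for this example and do not reflect Kublr's actual role model or API.

```python
# Hypothetical marker for a binding that applies to every space.
GLOBAL = "*"

# Hypothetical roles and the actions each one grants.
ROLE_PERMISSIONS = {
    "admin": {"cluster:create", "cluster:modify", "cluster:delete", "cluster:view"},
    "operator": {"cluster:modify", "cluster:view"},
    "viewer": {"cluster:view"},
}


def is_allowed(bindings, user, space, action):
    """Return True if any role bound to the user, globally or in the
    given space, grants the requested action."""
    for bound_user, bound_space, role in bindings:
        if bound_user != user:
            continue
        if bound_space not in (GLOBAL, space):
            continue
        if action in ROLE_PERMISSIONS.get(role, set()):
            return True
    return False


# Each binding is (user, space, role); the data is illustrative only.
bindings = [
    ("alice", GLOBAL, "viewer"),       # alice can view clusters everywhere
    ("alice", "staging", "operator"),  # and modify them in "staging"
    ("bob", "production", "admin"),    # bob administers "production" only
]
```

With these bindings, alice can view clusters in any space but modify them only in the hypothetical "staging" space, while bob has no access outside "production".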

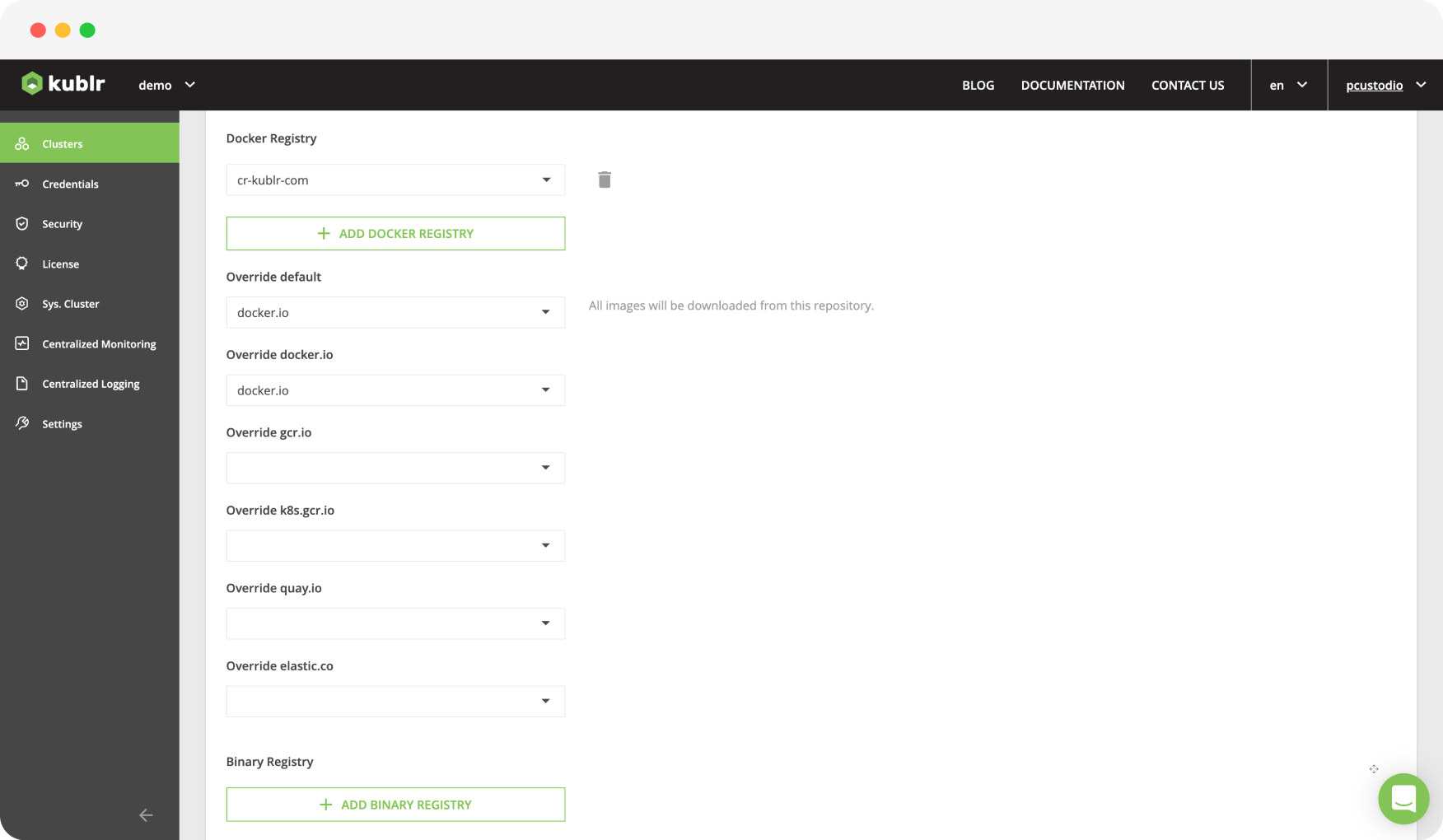

Configurable Kubernetes deployment options

Kublr enables deployment to existing VPCs/VNets, subnets and security groups to meet security requirements for separation of control. Kubernetes cluster components like overlay networks, etcd and DNS are also configurable to meet security and operational requirements.

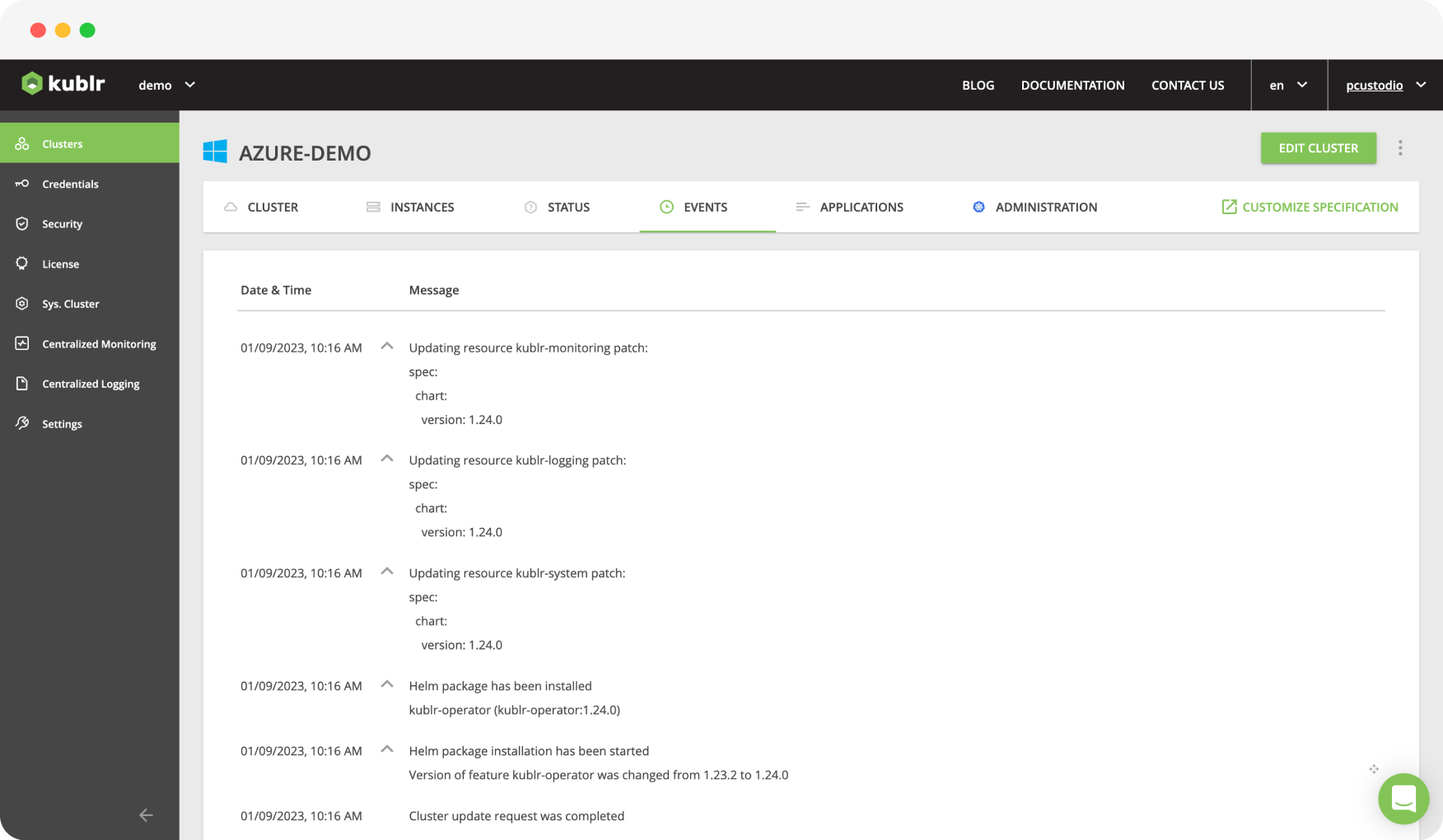

Auditing

Kubernetes and Kublr API auditing is enabled by default. Because every call to the Kubernetes API is captured, organizations can keep track of system activity and user actions, with a record of what changed, by whom and when. This helps with troubleshooting and with meeting compliance requirements.

GPU support

Automatically detect GPU devices and configure Docker and Kubernetes to work with supported GPUs, simplifying the deployment of AI, ML and deep-learning workloads.

Cloud-native application support

Kublr-created clusters use pure, upstream, open-source Kubernetes and are extensible with any cloud-native stack, framework or tooling (e.g., Istio, Spinnaker, Jenkins, Linkerd, Ceph). We guarantee support for any Kubernetes-compatible microservices platform.

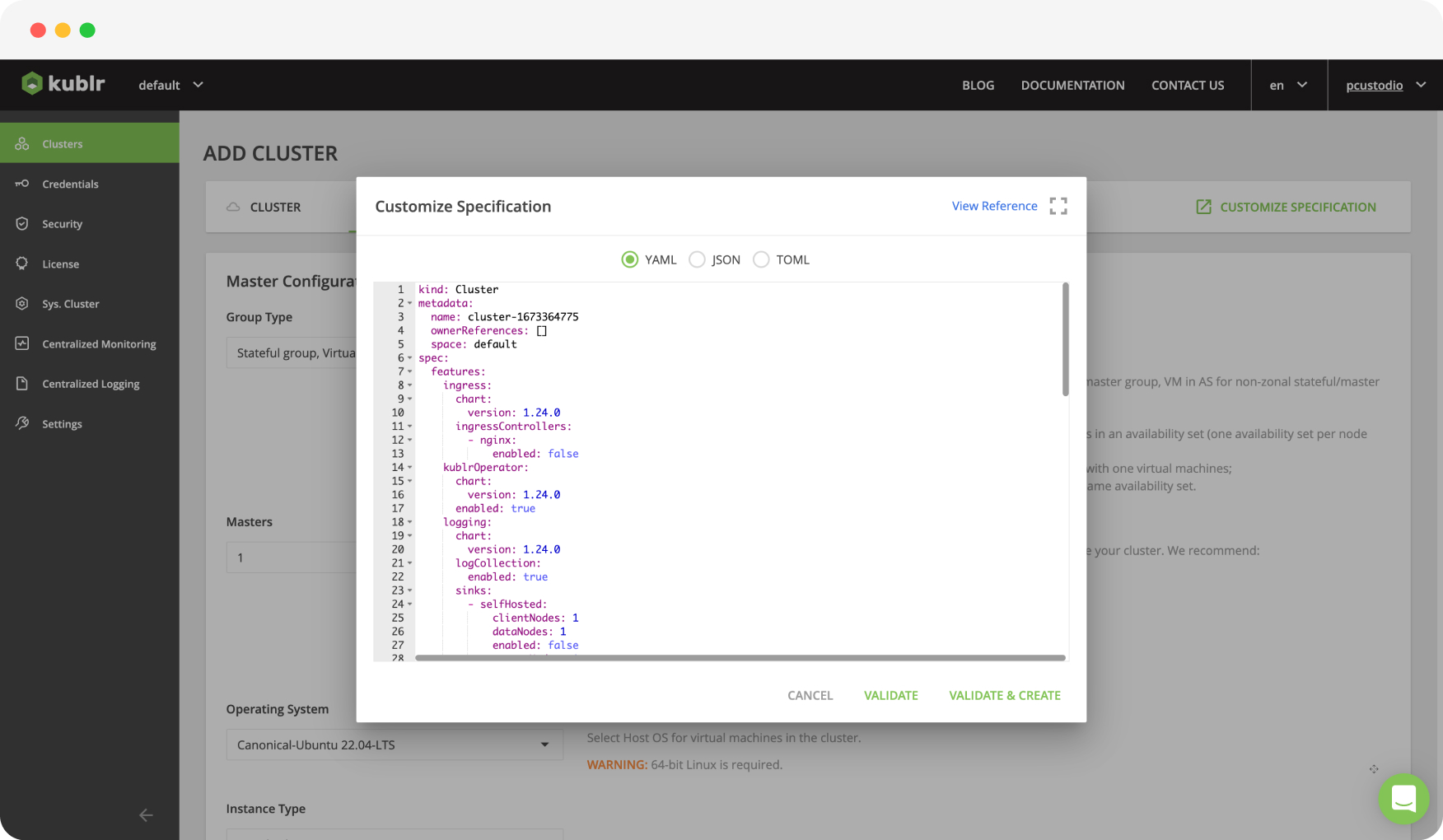

Custom cluster specification

We cannot possibly preconfigure Kublr for all possible use cases. That’s why we allow advanced users to customize cluster parameters and override specific images and specs predefined by Kublr through custom cluster specifications. This ensures Kublr works even with your most complex use cases.
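Conceptually, overriding predefined values with a custom specification resembles a recursive merge of user-supplied settings over defaults. The Python sketch below shows that general technique only. The `apply_overrides` function, the field names and the image references are hypothetical and are not taken from Kublr's cluster specification format.

```python
import copy


def apply_overrides(defaults, overrides):
    """Return a new dict in which values from `overrides` replace those in
    `defaults`, merging nested dicts key by key. Inputs are not modified."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_overrides(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical predefined values and a user's custom overrides.
defaults = {
    "network": {"overlay": "default-overlay", "dns_domain": "cluster.local"},
    "images": {"dns": "registry.example.com/dns:1.0"},
}
custom = {
    "network": {"overlay": "custom-overlay"},
    "images": {"dns": "registry.internal.example.com/dns:1.0-patched"},
}
spec = apply_overrides(defaults, custom)
```

Here `spec` keeps the default `dns_domain` while taking the overlay and DNS image from the custom values, so settings that are not overridden stay as predefined.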