With every major cloud provider adopting Kubernetes, and with former proponents of competing orchestration solutions such as Swarm and Marathon now offering it too, it’s clear that 2017 marked the end of the container orchestration battle.

There is a good reason for Kubernetes’ success. It is a great framework that supports high availability, scalability, and desired state management for containerized applications and services. The community enhancing Kubernetes through the Cloud Native Computing Foundation (CNCF) has taken on key challenges around stateful applications, scalability, and environment-specific integrations, and continues to innovate.

Concerned more with runtime than set-up, Kubernetes also offers great flexibility in what components you use and how you configure them to provide core functionalities. The downside of this flexibility is its complexity.

Kublr reduces this complexity with the release of Kublr 1.8, which includes a self-service control plane that helps operations teams meet the challenges of deploying, running, and managing enterprise-grade Kubernetes at scale.

The Challenges of Container Deployment, Management, and Orchestration at Scale

To the untrained eye, Kubernetes looks like it can be up and running in hours or days, but anyone who has taken the time to look at Kelsey Hightower’s “Kubernetes the Hard Way” understands that hand-rolling Kubernetes from scratch is quite complicated. First you have to choose which components to deploy. Then come the repetitive steps: logging into each worker node to install dependencies, directories, and binaries, configuring networking, the kubelet, and the proxy, and starting the worker services. All of this is time-consuming and potentially error-prone. To be fair, Kubernetes the Hard Way states that it is meant to be a tutorial optimized for learning, not an automation solution for Kubernetes deployments.

There are newer tools such as kubeadm and kops (limited to AWS) that provide some help. However, kubeadm is still in beta and sets up only a single master node, forgoing a highly available deployment until a later version. It is also narrowly focused on setting up clusters, with no interaction at the infrastructure level and no thought of “day two” capabilities.

For now, open source solutions tied to Kubernetes don’t come close to meeting enterprise requirements for security, high availability, logging and monitoring, and backup and disaster recovery: everything you need to make Kubernetes “production-ready.” Nor can they do this across multiple environments, both on-premises and in the cloud, or integrate this functionality with existing IT systems.

Enterprise Kubernetes Features as Standard

We’ve been deploying Kubernetes clusters since Kubernetes came to market. During that time, we’ve accumulated a wealth of Kubernetes knowledge: best practices for configuring clusters and components, and an understanding of which components work well together and which do not. This knowledge is embedded in Kublr’s certified architecture.

When we started building the Kublr platform, we knew we had to take care of elemental configuration issues: providing identity and access management (IAM) services, securing communication among nodes, and authenticating cluster images before putting them into production. Likewise, logging and monitoring, and backup and disaster recovery (of both volumes and configuration data), required selecting, integrating, and testing components. We also remain committed to building the Kublr platform with a pluggable architecture and upstream Kubernetes compliance, so that our customers can take advantage of the latest community contributions. In fact, we just certified the Kublr platform for Kubernetes 1.9 at the end of January.

Simplifying Kubernetes with the Kublr Control Plane

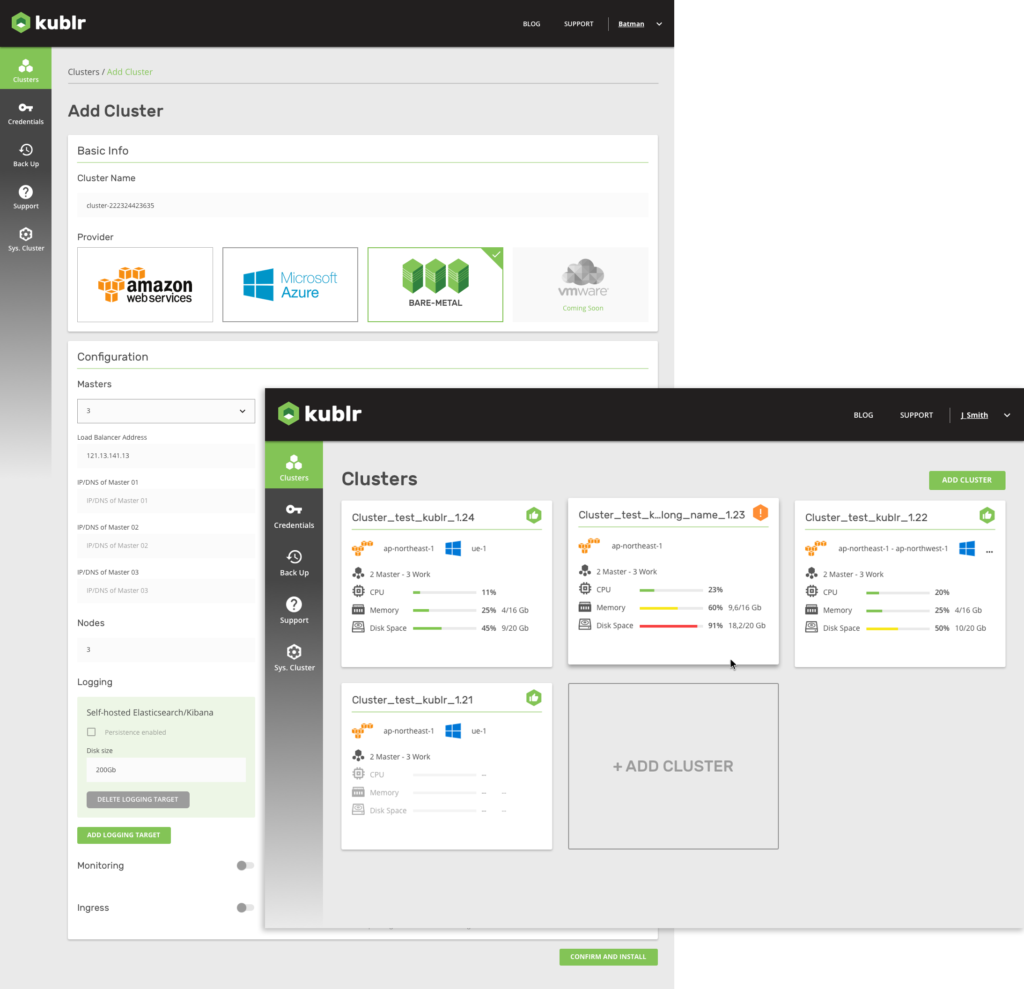

Part of the Kublr 1.8 release, the control plane takes into account the different levels of Kubernetes knowledge among IT staff, making it easy to deploy secure, resilient cluster configurations.

Through an intuitive GUI, your IT operations team can choose which environment to deploy to, select server sizes for master and worker nodes, determine the number of master and worker nodes, enable auto-scaling where the environment supports it, and set up ingress and persistent volumes as needed.
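To make those choices concrete, here is a minimal sketch of how they could be captured as a single record and sanity-checked before deployment. It is not Kublr’s actual configuration format or API: `NodeGroup`, `ClusterSpec`, `validate`, the field names, and the set of environments that support auto-scaling are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeGroup:
    """A group of identically sized nodes (hypothetical shape)."""
    instance_type: str
    count: int
    max_count: int = 0  # 0 means auto-scaling is off for this group


@dataclass
class ClusterSpec:
    """The GUI choices gathered into one record (illustrative only)."""
    environment: str  # e.g. "aws", "azure", "on-prem"
    masters: NodeGroup
    workers: NodeGroup
    ingress: bool = False
    persistent_volumes: bool = False


# Assumption: which environments can auto-scale is made up for this sketch.
AUTOSCALING_ENVIRONMENTS = {"aws", "azure"}


def validate(spec: ClusterSpec) -> List[str]:
    """Return human-readable problems; an empty list means the spec looks sane."""
    problems = []
    if spec.masters.count < 1:
        problems.append("at least one master node is required")
    elif spec.masters.count % 2 == 0:
        # A quorum-based store such as etcd gains no fault tolerance
        # from an even member count, so odd counts are preferred.
        problems.append("use an odd number of master nodes")
    if spec.workers.count < 1:
        problems.append("at least one worker node is required")
    if spec.workers.max_count:
        if spec.environment not in AUTOSCALING_ENVIRONMENTS:
            problems.append("auto-scaling is not available in this environment")
        elif spec.workers.max_count < spec.workers.count:
            problems.append("auto-scaling maximum is below the initial worker count")
    return problems
```

Returning a list of problems rather than raising on the first one lets a form or command line tool report every issue at once, which suits the self-service workflow described here.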

As Kubernetes usage grows, setting up and tearing down clusters quickly, reliably, and at scale becomes a critical operations capability. By automating the process, Kublr enables companies to operationalize Kubernetes and achieve the speed, scalability, and portability that containers, Kubernetes, and cloud-native architectures can provide.

Standalone Enterprise-Grade Kubernetes

Most Kubernetes solutions are tied to a single cloud or operating system, or are focused on providing a platform-as-a-service (PaaS) for your development and runtime environments. This limits their ability to help companies take advantage of the portability that container technologies provide. Large organizations want to use a hybrid, and sometimes even a multi-cloud, strategy. They are looking for a single solution that allows them to choose where to deploy their containerized applications. With Kublr, you can easily deploy, run, and manage your Kubernetes clusters on-premises or in the cloud.

Advanced Kubernetes Capabilities

When we considered what large organizations typically need, we saw that there would be more advanced requirements: auditing, multi-cluster monitoring from a single pane of glass, and in-place upgrades to new versions of Kubernetes. We also wanted to leverage the inherent capabilities of different environments. Cloud infrastructure in AWS and Azure provides access to APIs for provisioning servers and other components. Kublr simplifies deployments in these environments by integrating with AWS CloudFormation and Azure Resource Manager templates. This integration makes initial configuration easier and also provides runtime advantages. While Kubernetes can restart pods that fail, Kublr can restart the nodes that these pods run on when required. This provides an enhanced level of high availability.
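The difference between restarting pods and restarting nodes can be illustrated with a small decision function. This is only a sketch of the general idea, not a description of how Kublr implements it: `NodeStatus`, `nodes_to_restart`, the grace period, and the restart cap are hypothetical, and the actual restart call is left out because it depends on the infrastructure API in use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeStatus:
    """Minimal view of a node's health (hypothetical shape)."""
    name: str
    ready: bool
    last_heartbeat: float  # seconds since epoch


def nodes_to_restart(
    nodes: List[NodeStatus],
    now: float,
    grace_seconds: float = 300.0,
    max_restarts: int = 1,
) -> List[str]:
    """Pick not-ready nodes whose heartbeat is older than the grace period.

    The stalest nodes come first, and the result is capped at max_restarts
    so that a monitoring glitch cannot take down many nodes at once.
    """
    stale = [
        n for n in nodes
        if not n.ready and now - n.last_heartbeat > grace_seconds
    ]
    stale.sort(key=lambda n: n.last_heartbeat)
    return [n.name for n in stale[:max_restarts]]
```

The grace period gives a briefly unreachable node time to recover on its own, and the cap reflects a common precaution in self-healing systems of limiting how much capacity is recycled in a single pass.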

Kublr also monitors the internal operations of Kubernetes, keeping an eye on the resource utilization of internal components. This allows us to see how Kubernetes performs at different scales and with different workloads. Kublr’s pluggable architecture allows us to customize configurations based on workload, scale, and other application considerations. For advanced users, we’re opening up Kublr’s configuration engine for command line access, which we anticipate will be used by only a handful of super-users in any organization. This will enable them to provide operations staff with pre-configured templates for cluster deployments that match use case requirements.

What’s in Development?

As the CNCF Kubernetes roadmap and projects continue to enhance upstream Kubernetes and ecosystem capabilities, we’re focused on leveraging those developments and adding unique value for enterprise customers.

Here is a look at some of the Kubernetes developments we have underway:

- We’re developing enhanced scheduling capabilities for upstream Kubernetes that will enable further control and better economics.

- Though we already support deployments across multiple availability zones out of the box, deploying a single cluster across multiple regions is far more complex. We’ve solved this problem at a high level and are building out and testing the solution.

- Enhanced integrations with OpenStack and VMware are weeks away.

- We’re examining the use case for pre-configured applications running on top of Kubernetes and we welcome your thoughts on what would provide the most value.

- As our customers continue to use Kublr, we’re increasing our knowledge of runtime issues in different environments. Kublr’s intelligent operations will take advantage of this knowledge to provide IT operations staff with quicker problem isolation and recommended remediation actions.

Improvements to core Kubernetes and ecosystem solutions continue at a rapid pace. At Kublr, we’re focused on providing a comprehensive solution that leverages open source Kubernetes and adds the extended functionality that enterprises need.